Guest Post

Valentina is a Microsoft Specialist, focusing on Azure OpenAI. She has been working with Azure OpenAI and Azure SQL lately and in this post she’s sharing how to start using Azure OpenAI and Azure SQL or SQL Server to build next-gen solutions. You can find more of her articles in her blog: https://medium.com/@valentinaalto. Thanks Valentina!

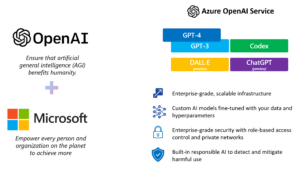

In January 2023, Microsoft announced the General Availability of the Azure OpenAI Service (AOAI), which allows Azure customers to access OpenAI models directly within their Azure subscription and with their own capacity.

There are four models families available at the moment:

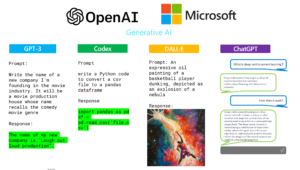

- GPT: Generative Pre-trained Transformers are powerful generative models which are best suited for understanding and generating natural language.

- Codex: those are a fine-tuned version of GPT which has a specific verticalization on understanding and generating programming languages.

- DALL-E: this is the model which is able to generate images starting from natural language.

- ChatGPT: this is the engine behind ChatGPT. As for now, the ChatGPT API is available as GPT-3.5-turbo and GPT-4.

In this post, we will see how you can use those models to query your SQL tables. The idea is that of generating SQL queries using Azure OpenAI models’ API with Python code.

Prerequisites

- An Azure subscription – Create one for free.

- Access granted to Azure OpenAI in the desired Azure subscription. You can apply for access to Azure OpenAI by completing the form at https://aka.ms/oai/access.

- An Azure OpenAI resource with a model deployed. For more information about model deployment, see the resource deployment guide.

- An Azure SQL Database – you can follow the instructions here.

- Python 3.7.1 or later versions.

- LangChain library installed (you can do so via pip install langchain)

Quickstart Demo

The first thing we want to do is import one of our SQL tables into a pandas dataframe. To do so, we can use the pyodbc library in Python, which you can easily install via pip install pyodc. To connect with my Azure SQL DB, I used an ODBC connection. You can find the information endpoints under the “Connection Strings” tab of your SQL DB instance:

import pyodbc

import pandas as pd

server = 'servername'

database = 'AdventureWorks'

username = 'yourusername'

password = 'databasename'

cnxn = pyodbc.connect("Driver={ODBC Driver 18 for SQL Server};Server=tcp:server,1433;Database=database;Uid=username;Pwd=password;Encrypt=yes;TrustServerCertificate=no;Connection Timeout=30;")

cursor = cnxn.cursor()

# select 10 rows from SQL table to insert in dataframe.

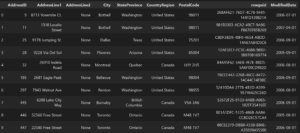

query = "select * from [SalesLT].[Address];"

df = pd.read_sql(query, cnxn)

print(df.head(10))The result will be something like:

Note: while creating my Azure SQL DB, I’ve also created the sample database AdventureWorks. You can use any SQL table you wish.

The next thing we need to do is initialize our Azure OpenAI model. To do so, we will use LangChain, a Python library that makes it easier to combine the power of Large Language Models (LLMs) with the logic of an application.

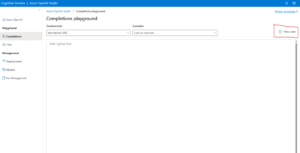

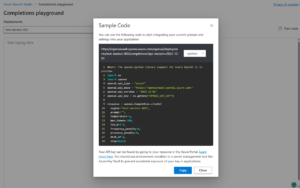

To initialize your model, you also need to provide your keys and endpoint for your Azure OpenAI models’ (you can find them under the “Keys and Endpoints” tab in your Azure OpenAI resource) as well as the name of your deployment and model. You can find all this information from the Azure OpenAI playground when exporting your code. Click on the “View Code” link on the top right:

And then get the needed informations from the sample code:

For this demo, I’ve used a text-davinci-003 model.

Let’s have a look at the code:

# Import Azure OpenAI

from langchain.llms import AzureOpenAI

import openai

import osos.environ["OPENAI_API_TYPE"] = "azure" os.environ["OPENAI_API_KEY"] = "xxxx" os.environ["OPENAI_API_BASE"] = "xxxx" os.environ["OPENAI_API_VERSION"] = "2022-12-01"llm = AzureOpenAI(deployment_name="text-davinci-003", model_name="text-davinci-003")

Finally, we can use the pandas DataFrame agent in LangChain and start asking questions against our table:

from langchain.agents import create_pandas_dataframe_agent

agent = create_pandas_dataframe_agent(llm, df, verbose=True)

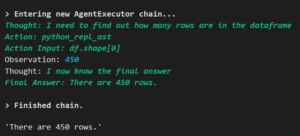

agent.run("how many rows are there?")As you can see, the agent, powered by Azure OpenAI models, is able to provide the right output. Also, thanks to the LangChain Agent, it is also able to show which is the thought process behind and which are the queries that it used to retrieve the answer.

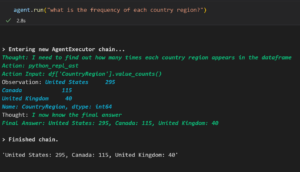

Let’s try with other queries. For example, let’s check “what is the frequency of each country region”:

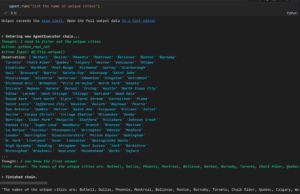

Another interesting query – just to check how “clever” the AI is, is to ask for the distinct values of city names available in our database:

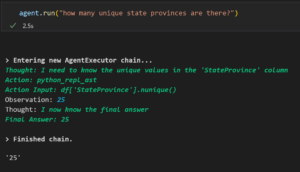

Or maybe ask for the count the unique items. State and Provinces in this sample:

Azure OpenAI models are powerful generative AI tools that can be infused into custom applications, thanks to their APIs. With LangChain, the framework to manage those APIs is easier and allows for better interaction with the context, using any data stored in Azure SQL tables.

The post Make data more human with Azure OpenAI and Azure SQL appeared first on Azure SQL Devs’ Corner.